We were approached by a prospective client that had been using one of the largest organizations in the country to distribute its public service advertising (PSA) campaigns. When they told us the dollar value this organization had supposedly generated, I was astounded, and after more than 40 years in the PSA field, almost nothing astounds me anymore.

The values they were claiming were ten times higher than those of the most successful campaign we had ever distributed at the time, and almost all of their PSA campaigns achieved these astronomical numbers. The prospective client was quite concerned, because their board had bought into these stratospheric values, and switching to another distributor that might not match those previous results would have been a significant problem. This background illustrates why we need meaningful, credible and objective standards for measuring the value of public service advertising.

The PSA Universe

No one knows how many organizations use PSAs as a way to communicate with their stakeholders, but it is a large number.

Nearly every federal agency uses them, along with states, public interest groups, associations and non-profits, so the aggregate amount spent on these campaigns is huge. On the media side, back in 2005 the National Association of Broadcasters published a survey indicating that local TV and radio stations had contributed $10.5 billion to community service campaigns, a figure that included on-air promotions and local activities along with PSAs.

That number, as big as it is, tells a non-profit almost nothing about how to evaluate the worth and impact of its own PSA program, or how to generate numbers that pass the “snicker test.”

The First PSA Evaluation System

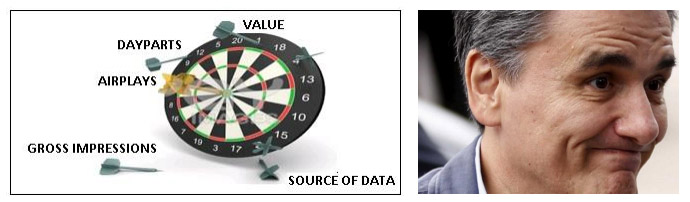

Back in 1983, when I worked as an advertising consultant to the U.S. Coast Guard, we developed the very first PSA evaluation system. It was rudimentary; it was not user-friendly; it had to be rebuilt each month through a lot of data keypunching. But it was something, and to my mind something was better than nothing. And our data did not come from throwing darts at a board. It came from the Broadcast Advertisers Report, the precursor to the Nielsen Spot Track monitoring system now used by all PSA distributors and PSA clients.

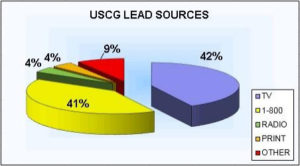

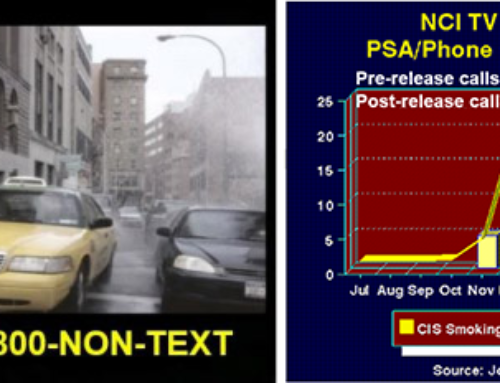

Our goal in developing a PSA tracking system in those days was twofold. First, we wanted to show how the U.S. Coast Guard’s TV PSAs stacked up against those of the other military services, which had huge advertising budgets. Our data showed that in some months we actually generated more exposure than the other military services, in spite of our very modest ad budget. Second, we wanted to measure the impact of the PSAs on the Coast Guard’s recruiting program by tracking which media sources generated leads.

In addition to developing the first PSA evaluation software, we may also have been the first to try to correlate the Coast Guard’s PSAs with its critical mission: using advertising to recruit qualified applicants.

We were able to isolate the PSAs from paid advertising because the Coast Guard bought only a few, very selective paid magazine ads; the rest of our advertising budget was spent exclusively on PSAs. As shown in this graph, 83% of their leads came from PSAs, almost all of them from TV: when people called the toll-free number, it usually meant they had gotten it from the TV PSAs.

Legitimate PSA Data Sources

Fast forward several decades. When we submitted a PSA proposal, we were asked: “Which source do you get your PSA values from, the National Association of Broadcasters or the TV Advertising Bureau?”

Our answer was neither, because those two organizations do not provide, and never have provided, that kind of data. Yet we know of one PSA distributor that continues to tell its clients and prospects that it gets its PSA evaluation data from the NAB. When I heard this, my first reaction was: don’t they realize how easy this is to check?

Being quite familiar with NAB, I called my contact there to see if they indeed provided such data. “We do not have that kind of data and if we did, we would not give it to external parties,” the staff person at NAB told me.

This raises the question: if the PSA distributor that names NAB as its data source is not getting its data from NAB, where is it getting it? A dartboard comes to mind.

Evaluation data is what PSA distributors use to impress their clients with the success of any given campaign. Perhaps more importantly, when PSA distributors all use different methods and sources to arrive at PSA values, it becomes impossible to compare results objectively from one distributor to another. And if distributors are not using data from real and reliable sources, the credibility of the entire PSA “profession” is undermined.

Passing the Snicker Test

The purpose of this article is not to point fingers at any other distributor, because all companies that distribute and evaluate PSA campaigns face the same challenge: how do we get credible evaluation data that passes what we call the “snicker test”?

Here is where the “snicker test” comes in. Suppose you, as the communications director for a major non-profit, are charged with presenting results from your latest PSA campaign to your board. You present the data, which might include the number of airplays by media type, the times of day your TV PSAs aired and the markets where they ran, and then you drop a huge number on them called “Gross Impressions.”

As you present your data, the board members seem to be digesting the PSA airplays, values and other qualitative data, until you mention Gross Impressions and their eyes glaze over. One of them starts to twist nervously in his seat and raises his hand: “Uh, excuse me, could you please give us more information on the Gross Impressions? What does that mean exactly?”

You are ready for the question and you read off the following definition: According to A.C. Nielsen, the definition of Gross Impressions is: “The sum of audiences, in terms of people or households viewing, where there is exposure to the same commercial or program on multiple occasions. It can be calculated by the reach, multiplied by the number of times the ad/commercial will run.”

When you finish your explanation, one of the board members says: “So you are telling us that if we have Gross Impressions of 700 million, every man, woman and child in the U.S., or some combination of that audience, saw our PSAs twice?” And he snickers as he asks it.

You, as the staff member who has to defend these huge PSA numbers, are mortified, because you cannot defend them, and in reality no one else can either.
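To see why the board member's arithmetic lands, here is a minimal Python sketch of the Nielsen definition quoted above, in which Gross Impressions equal reach multiplied by the number of times the message is seen. The function names are hypothetical, and the 350 million U.S. population is a round illustrative figure, not a census number.

```python
# Gross Impressions per the Nielsen definition: reach multiplied by
# the number of times the message is seen (average frequency).
# All figures are hypothetical illustrations.


def gross_impressions(reach: int, frequency: float) -> float:
    """Total exposures: unique people reached times average exposures each."""
    return reach * frequency


def implied_frequency(gross: float, population: int) -> float:
    """Times each person in a population would have had to see the PSA
    for the reported Gross Impressions to add up."""
    return gross / population


if __name__ == "__main__":
    reported = 700_000_000          # the board member's 700 million
    us_population = 350_000_000     # hypothetical round figure
    print(implied_frequency(reported, us_population))   # 2.0

    # Very different campaigns can yield the identical total.
    print(gross_impressions(reach=70_000_000, frequency=10))   # 700000000
    print(gross_impressions(reach=350_000_000, frequency=2))   # 700000000
```

The last two lines show the underlying problem: a campaign that reached 70 million people ten times each and one that reached 350 million people twice produce the same 700 million Gross Impressions, so the total on its own says little about who actually saw the PSAs.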

Advertising Equivalency Value (AEV) as a Metric

A few years ago, I wrote a blog post called “The Case for Advertising Equivalency,” which argued that the value of donated PSA time and space was the best metric of success, and much more meaningful than Gross Impressions, which no one can defend.

Yet, in spite of our views, many people in the PR profession – a profession that I spent many years in – feel that advertising equivalency should not be a meaningful part of campaign evaluation. Here is a statement by David Rockland, partner and CEO of Ketchum Pleon Change, one of the largest PR consulting firms in the world.

“Ad value equivalency is conceptually wrong.” He went on to say that “If you can’t recognize it as a bad idea, then you probably shouldn’t be in PR.”

It could be a matter of semantics, because the public service advertising and PR worlds are quite different in the way exposure occurs. However, for purposes of discussion, let’s review Mr. Rockland’s statement more carefully and look at what he and his colleagues at the Institute for Public Relations propose as a better way to measure campaign impact.

At the European Summit on PR Measurement held in Barcelona, leaders from 30 countries met to discuss global standards and practices to evaluate public relations programs.

One of the delegates at the conference, Andre Manning, global head of external communications at Royal Philips Electronics, said that his firm has “reworked its PR approach to ‘outcome communications,’ and totally abandoned ad value equivalency.”

My response is: what leads these PR experts to think that anyone engaged in public service advertising evaluation uses advertising equivalency value (AEV) as their only measure of success?

Everyone we know who runs public relations and/or public service advertising programs uses advertising equivalency as just one of several important metrics for measuring campaign outcomes.

The question being raised, though, is why use it at all. We offer two reasons.

- First, AEV inherently reflects many different aspects of media value in the way it is calculated. For example, when calculating the AEV for broadcast TV exposure, the size of the market (as defined by population), the prominence of the station within the market in terms of audience size, the time of day the exposure occurred, and the length and frequency of the message are all reflected in the AEV.

- Second, everyone can do the math. If an organization spent X dollars to create and distribute a campaign and got back Y dollars in advertising equivalency value, it knows its return on investment (ROI). No board member will snicker if you give them an impressive ROI, because they use these numbers in their own businesses, and it is a number you can defend.
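The two reasons above can be made concrete with a minimal Python sketch. It assumes, as a simplification, that each detected airing is valued at what a comparable paid spot would have cost, looked up from a hypothetical rate table keyed by market size and time of day, adjusted by a hypothetical station audience multiplier and scaled linearly by spot length. AEV is the sum over all airings, so frequency is reflected automatically, and the ROI ratio is AEV divided by campaign cost. All names, rates and costs are invented for illustration; real AEV calculations rely on actual media rate data.

```python
from dataclasses import dataclass

# Hypothetical paid cost of a 30-second spot, keyed by (market size, daypart).
# Placeholders only; real AEV work would use actual media rate data.
RATE_30S = {
    ("large", "prime"): 2000.0,
    ("large", "overnight"): 150.0,
    ("small", "prime"): 300.0,
    ("small", "overnight"): 25.0,
}


@dataclass
class Airing:
    market: str                  # market size bucket (stands in for population)
    daypart: str                 # time of day the PSA aired
    seconds: int                 # spot length
    station_factor: float = 1.0  # hypothetical audience multiplier, 1.0 = typical


def airing_value(a: Airing) -> float:
    """Equivalent paid cost of one airing, scaled linearly by length."""
    return RATE_30S[(a.market, a.daypart)] * a.station_factor * a.seconds / 30


def aev(airings: list[Airing]) -> float:
    """Advertising equivalency value: the sum of per-airing values, so every
    additional airing (frequency) adds to the total."""
    return sum(airing_value(a) for a in airings)


def roi_ratio(aev_total: float, campaign_cost: float) -> float:
    """ROI expressed as AEV divided by the total campaign cost."""
    return aev_total / campaign_cost


if __name__ == "__main__":
    log = [
        Airing("large", "prime", 30),
        Airing("large", "overnight", 60),
        Airing("small", "prime", 15, station_factor=2.0),
    ]
    total = aev(log)
    print(total)                      # 2600.0
    print(roi_ratio(total, 650.0))    # 4.0
```

Here the three airings are worth $2,600 in equivalent paid time, and against a hypothetical $650 campaign cost that is a 4-to-1 ratio, a figure any board member can check with a calculator.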

Where we agree with the PR experts quoted above is that there is no single determinant of a campaign’s success. Every non-profit organization has to define which marketing objectives are important to it, and whether its PSA campaign is helping it achieve them.

In our world of PSA evaluation:

- Several factors would determine the success of a campaign.

- One factor, Gross Impressions as a stand-alone measure of success, would never see the light of day in any future evaluation report.

Success Metrics:

- Did the campaign generate a reasonable return on your investment? We use benchmarking to show how any given client campaign compares to a standard. Another way is simply to divide the advertising equivalency value by the total cost of the PSA campaign to establish an ROI ratio.

- Did the campaign help you achieve your critical mission, whether that is getting people to visit your website, encouraging volunteers to join your organization or increasing awareness of your issue?

- Are you using only Gross Impressions to define success? If so, we believe you are relying on a single metric to determine the impact of your PSA program instead of taking a holistic approach. Gross Impressions become a huge, meaningless number that no one can defend, and even if you could defend it, the question remains: what does this have to do with my organization’s critical mission?

In this article, we outline in further detail the different ways you can use PSA evaluation data to determine the success of your PSA program: www.psaresearch.com/how-you-can-use-evaluation-data-to-fine-tune-your-psa-program/

Bill is the founder of Goodwill Communications, Inc., a firm that distributes and evaluates national PSA campaigns for non-profits and federal agencies.
